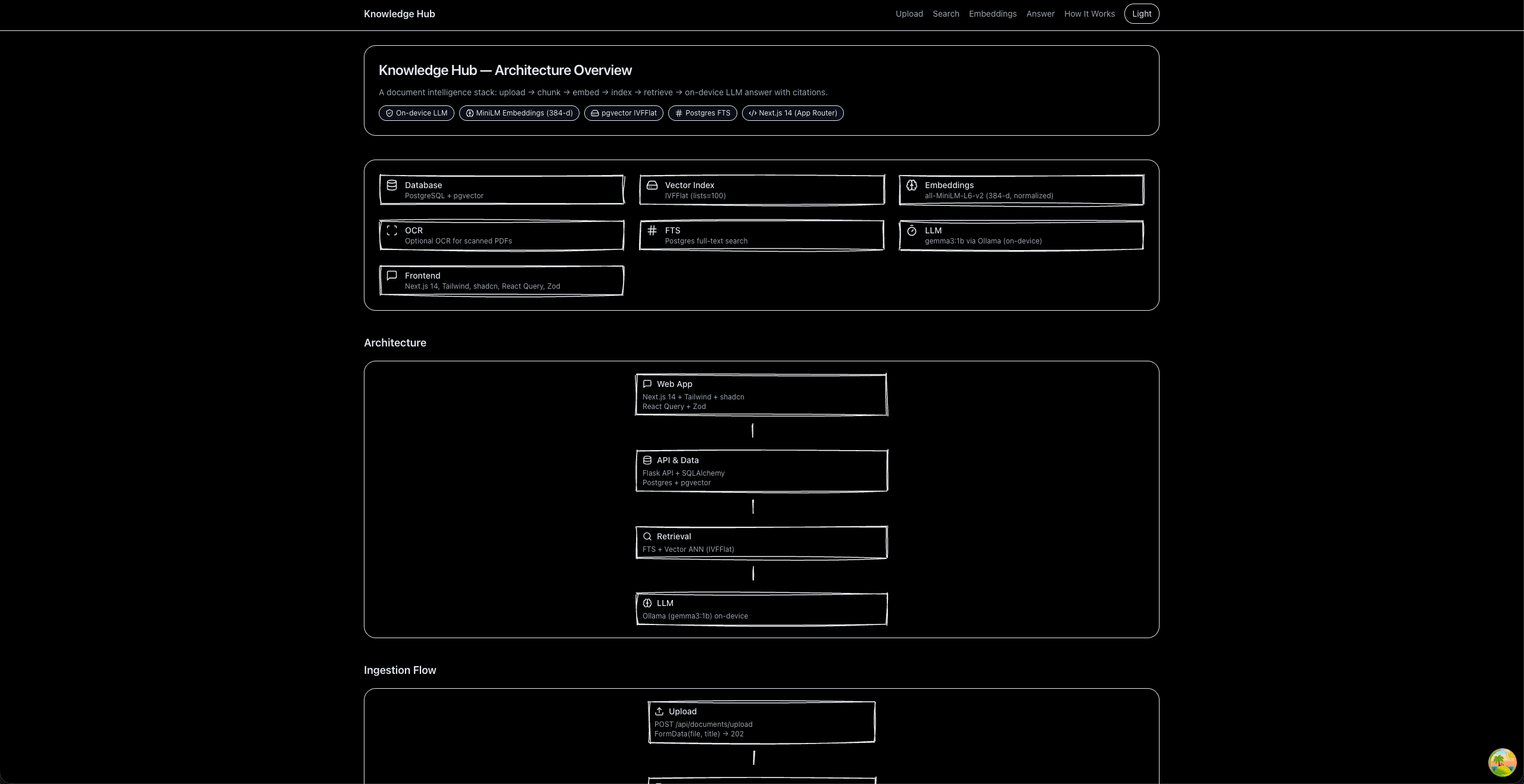

Knowledge Hub - AI-Powered Document Management System

A document management and ingestion service built with Flask, SQLAlchemy, and PostgreSQL with pgvector. It provides OCR, semantic search, and AI-powered question answering for academic research and homework preparation.

How It Works

Project Overview

Knowledge Hub is a document management system I developed to streamline my MS in CS coursework at USC. It addresses a common problem: managing and searching large collections of academic materials, research papers, and course documents.

The system is built with Flask and PostgreSQL with the pgvector extension, and it offers both traditional full-text search and vector-based semantic search. Key features include automated OCR for PDFs and images, document chunking, vector embeddings for semantic search, and integration with a local LLM (via Ollama) for question answering. It is containerized with Docker for easy deployment and exposes API endpoints for document management.

This project demonstrates my experience in full-stack development, AI/ML integration, database design, and system architecture. It has improved my academic workflow by letting me quickly retrieve relevant information from course materials and research papers.

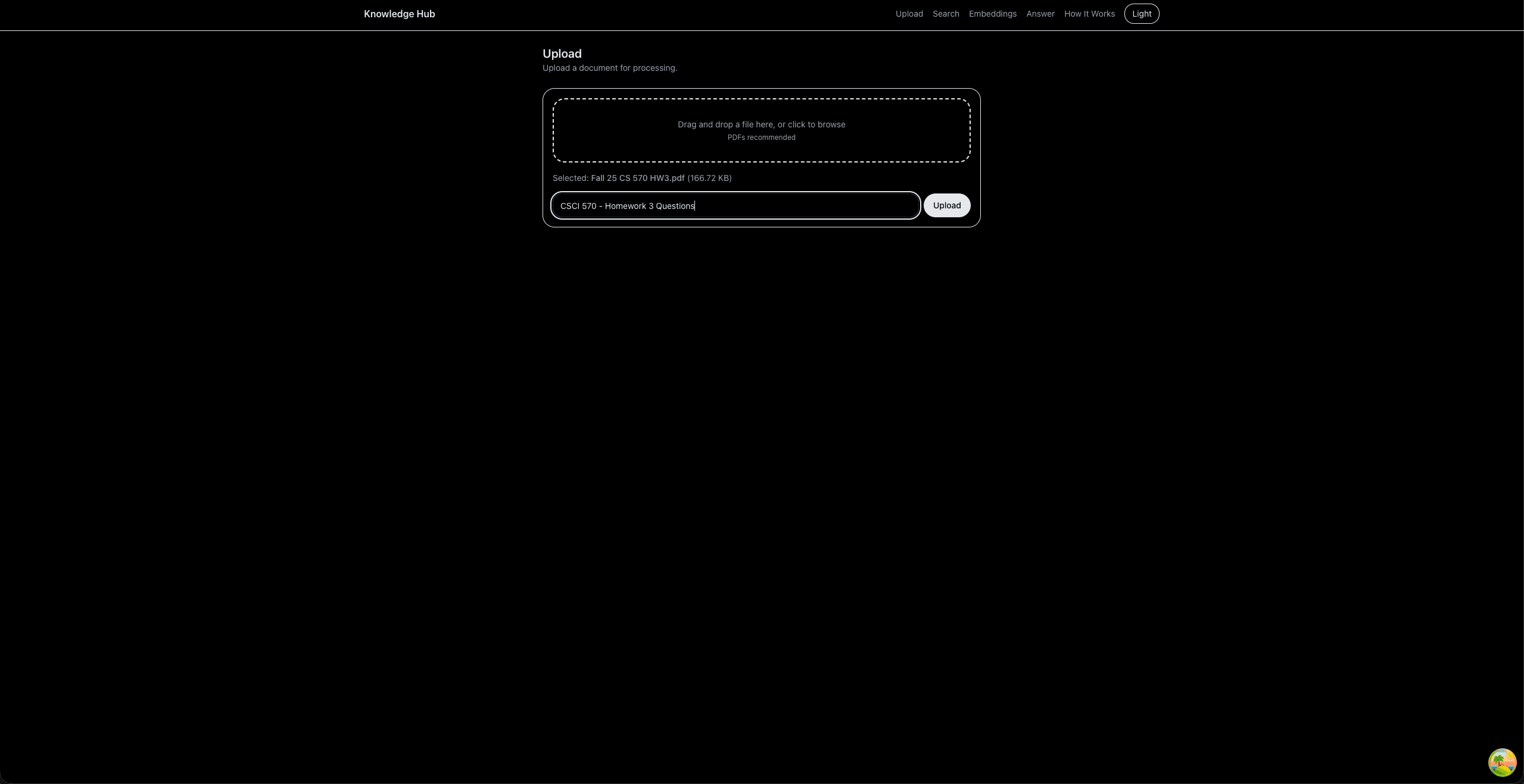

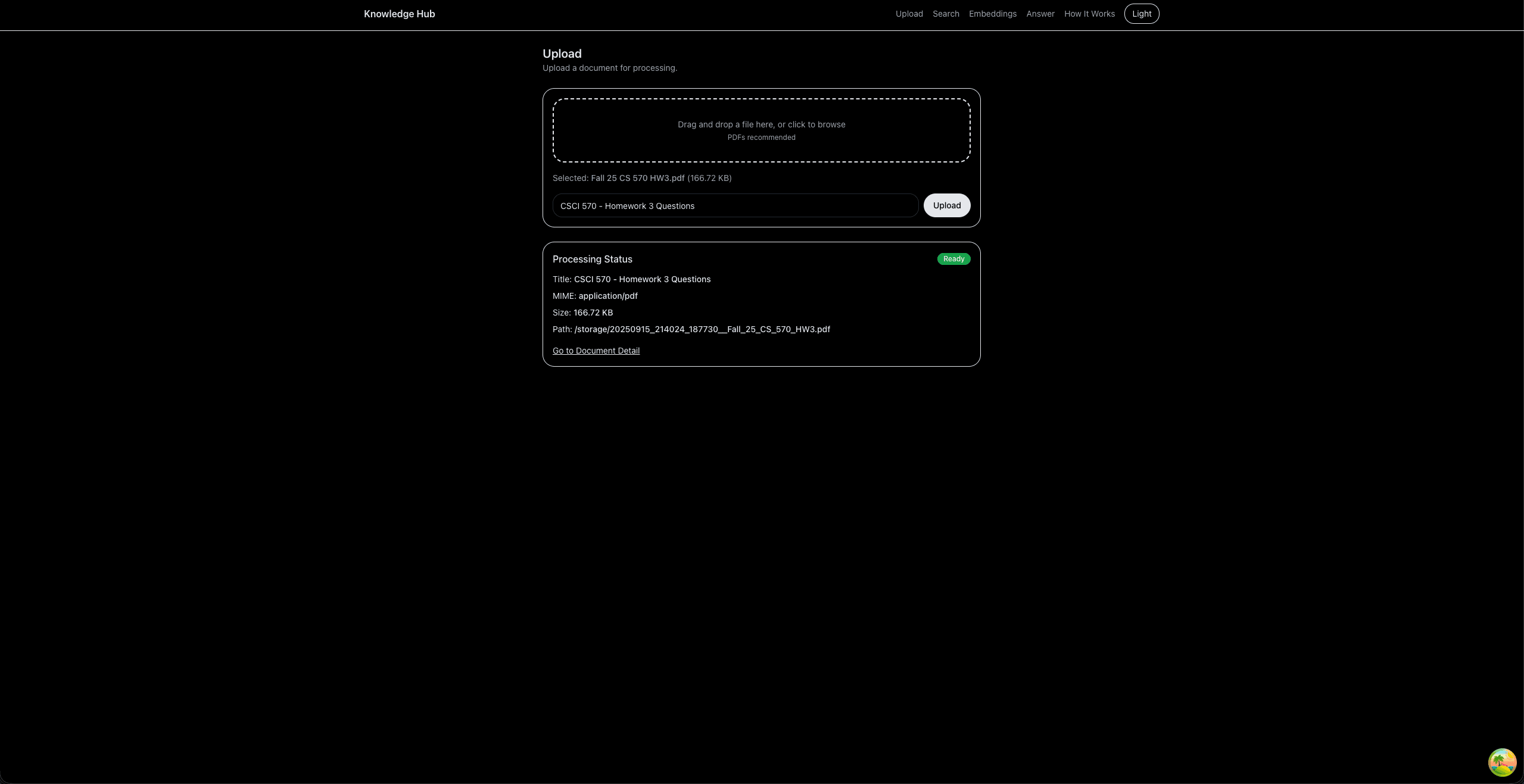

Document Upload & Processing

Key Features

Document Management

Upload, store, and organize documents with automatic metadata extraction and categorization

AI-Powered OCR

Automatic text extraction from PDFs and images using OpenCV, PyMuPDF, and Tesseract
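
As a rough illustration of how OCR output can carry a confidence score forward, here is a minimal sketch. It assumes word-level results shaped like the dictionary that pytesseract's `image_to_data(..., output_type=Output.DICT)` returns (parallel `text` and `conf` lists, with `-1` marking rows that hold no word). The function name `page_confidence`, the `min_conf` parameter, and the sample values are hypothetical and are not taken from the Knowledge Hub code.

```python
from __future__ import annotations

from statistics import mean


def page_confidence(ocr_data: dict, min_conf: float = 0.0) -> tuple[str, float]:
    """Join recognized words and return (text, mean confidence scaled to 0..1)."""
    words, confs = [], []
    for text, conf in zip(ocr_data["text"], ocr_data["conf"]):
        conf = float(conf)  # confidences may arrive as strings or numbers
        if conf < 0 or not text.strip():
            continue  # skip layout rows that contain no recognized word
        if conf < min_conf:
            continue  # drop words below the caller's threshold
        words.append(text.strip())
        confs.append(conf)
    score = mean(confs) / 100.0 if confs else 0.0
    return " ".join(words), score


# Made-up sample in the assumed dictionary shape.
sample = {
    "text": ["", "Gradient", "descent", "c0nv3rg"],
    "conf": ["-1", "96", "91", "35"],
}
text, score = page_confidence(sample)
clean_text, clean_score = page_confidence(sample, min_conf=50)
```

A per-page score like this could be stored alongside each chunk so that low-quality scans rank lower at search time.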

Semantic Search

Vector-based similarity search using pgvector and Sentence-Transformers for intelligent content discovery
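
To make the idea of vector similarity concrete, the sketch below ranks chunks by cosine distance in plain Python. This is the same quantity pgvector's `<=>` operator computes inside the database, where a query would typically order by `embedding <=> :query_vector`. The function names, chunk IDs, and three-dimensional toy vectors are illustrative assumptions; all-MiniLM-L6-v2 actually produces 384-dimensional embeddings.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity; smaller means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm if norm else 1.0


def top_k(query_vec, chunks, k=3):
    """Rank (chunk_id, embedding) pairs by cosine distance to the query."""
    scored = [(cid, cosine_distance(query_vec, emb)) for cid, emb in chunks]
    return sorted(scored, key=lambda pair: pair[1])[:k]


# Hypothetical chunk embeddings (toy 3-d vectors for readability).
chunks = [
    ("notes-1", [1.0, 0.0, 0.0]),
    ("paper-7", [0.6, 0.8, 0.0]),
    ("hw-3", [0.0, 0.0, 1.0]),
]
results = top_k([1.0, 0.1, 0.0], chunks, k=2)
```

In practice the database performs this ranking, so only the query vector crosses the wire and an index can avoid scanning every row.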

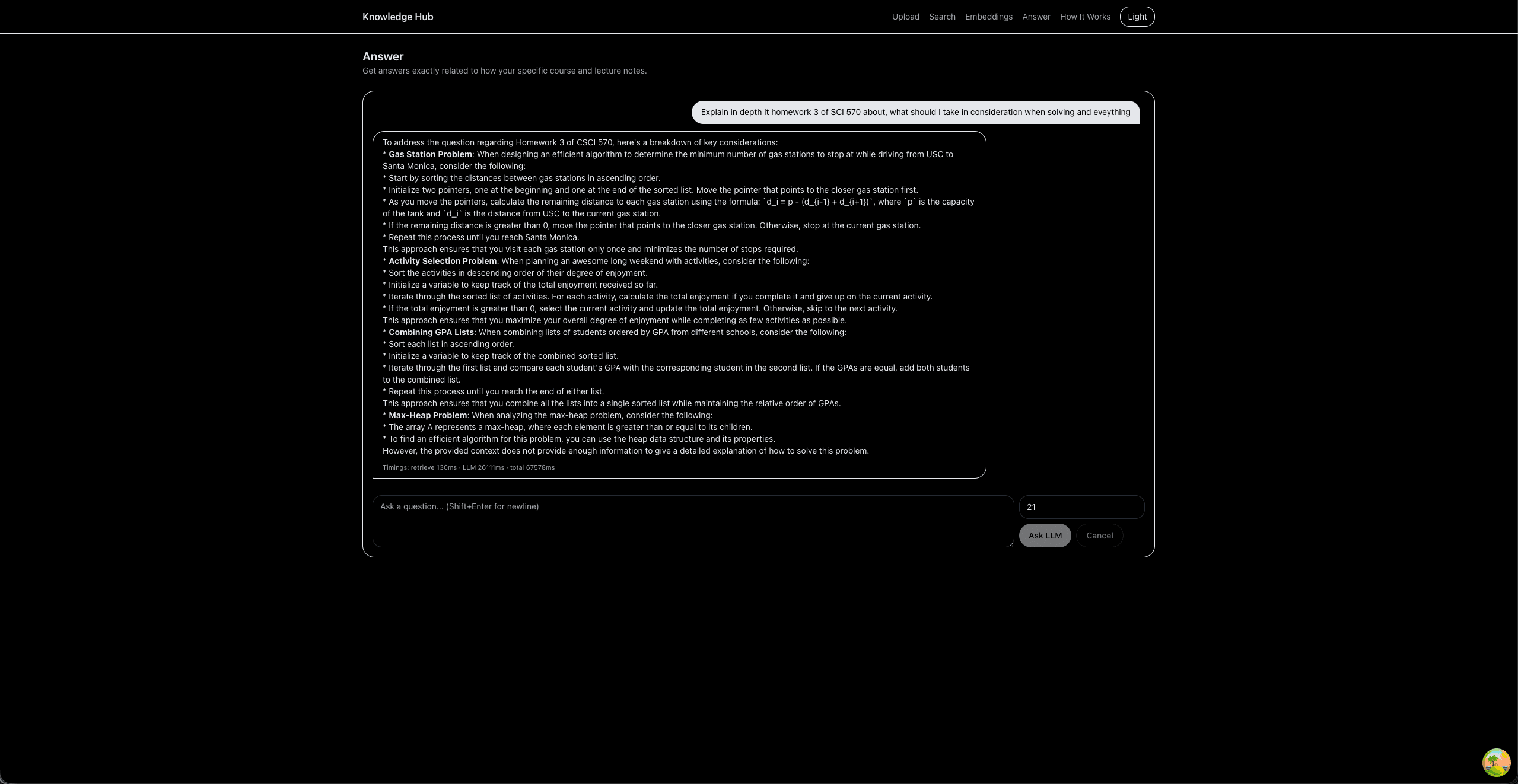

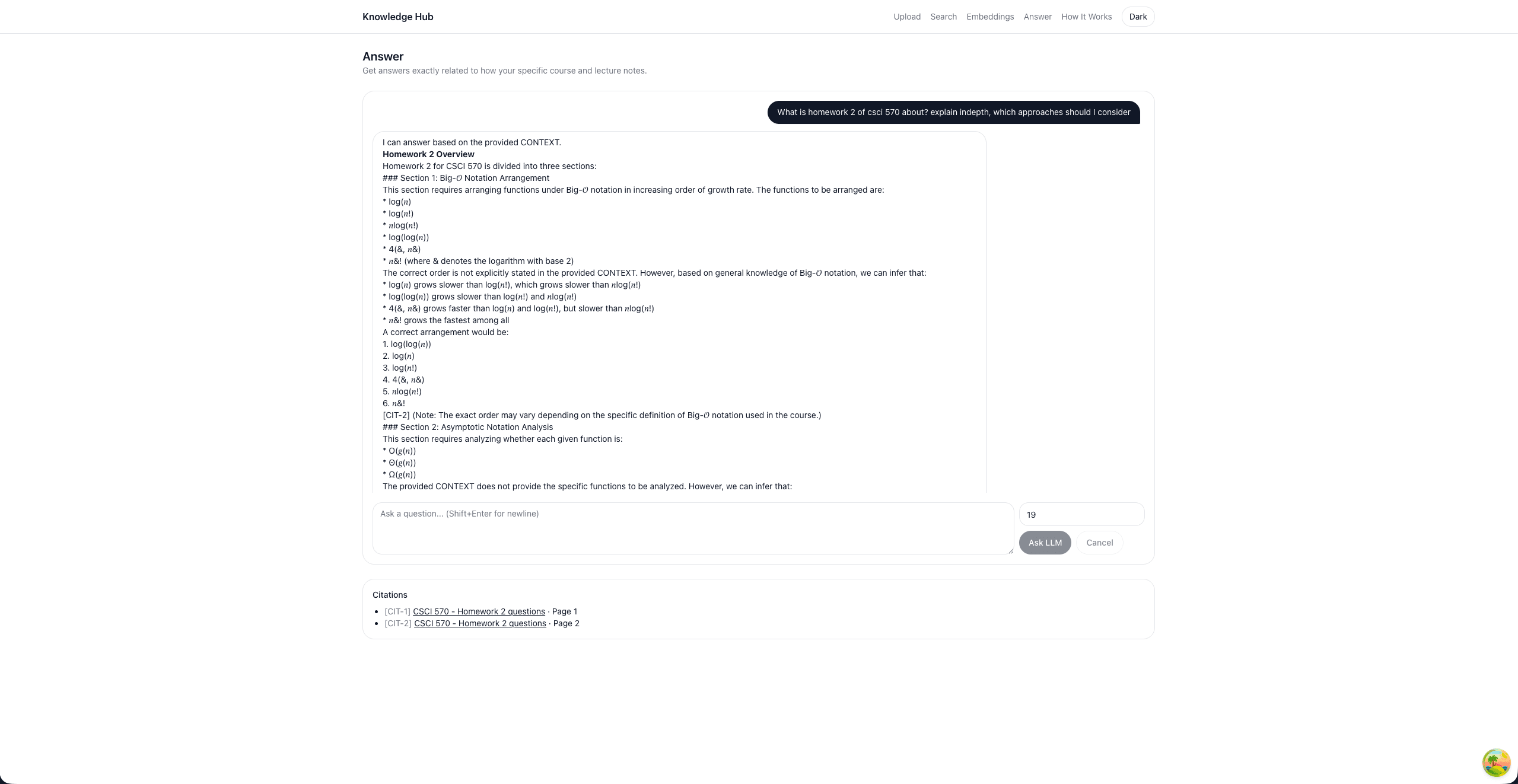

Question Answering

RAG-powered Q&A system with local LLM integration for contextual answers with citations
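
The retrieval-augmented flow can be sketched as two steps: number the retrieved chunks so the model can cite them, then send the prompt to the local model. The example below is a sketch under assumptions, not the project's actual code. The prompt wording, the function names, and the chunk dictionaries are hypothetical. The request targets Ollama's `/api/generate` endpoint on its default local port and is defined but not called here, since it needs a running Ollama server.

```python
import json
import urllib.request


def build_prompt(question, chunks):
    """Number each retrieved chunk so the answer can cite it as [n]."""
    context = "\n".join(
        f"[{i}] ({c['source']}) {c['text']}" for i, c in enumerate(chunks, 1)
    )
    return (
        "Answer the question using only the numbered context below. "
        "Cite sources like [1]. If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def ask_ollama(prompt, model="gemma3:1b",
               url="http://localhost:11434/api/generate"):
    """Send a non-streaming generate request to a local Ollama server."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]


# Hypothetical retrieved chunks.
chunks = [
    {"source": "lecture4.pdf",
     "text": "Backpropagation applies the chain rule layer by layer."},
    {"source": "notes.md",
     "text": "Gradients flow from the loss back to the inputs."},
]
prompt = build_prompt("What is backpropagation?", chunks)
```

Keeping prompt construction separate from the network call makes the citation format easy to test without a model running.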

AI-Powered Question Answering

Technical Implementation

- Flask REST API with SQLAlchemy ORM for backend services

- PostgreSQL with pgvector extension for vector similarity search

- Docker containerization for easy deployment and scaling

- OCR processing with OpenCV, PyMuPDF, and Tesseract

- Vector embeddings using Sentence-Transformers (all-MiniLM-L6-v2)

- Local LLM integration with Ollama (gemma3:1b)

- Hybrid search combining full-text and semantic search

- Intelligent document chunking (300-700 tokens with overlaps)

- Confidence-aware ranking for OCR results

- RESTful API with comprehensive endpoints for document management
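
The chunking item above can be illustrated with a sliding window over tokens. This is a minimal sketch, assuming a fixed window of 500 tokens with a 50-token overlap (values chosen to fall inside the 300-700 range listed above) and treating each list element as one token. The real system may split on sentence or section boundaries instead, and `chunk_tokens` is a hypothetical name.

```python
def chunk_tokens(tokens, max_tokens=500, overlap=50):
    """Split a token list into windows that share `overlap` tokens."""
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    chunks = []
    step = max_tokens - overlap  # how far the window advances each time
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_tokens])
        if start + max_tokens >= len(tokens):
            break  # this window already reached the end of the document
    return chunks


# Stand-in tokens; a real pipeline would use a tokenizer's output.
tokens = [f"w{i}" for i in range(1200)]
chunks = chunk_tokens(tokens)
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of storing some tokens twice.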

Challenges Faced

- Implementing efficient vector search with large document collections

- Optimizing OCR processing for various document types and quality

- Designing hybrid search algorithms for optimal relevance ranking

- Managing memory requirements for vector embeddings and LLM inference

- Creating intuitive API design for complex document operations
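
For the hybrid ranking challenge listed above, one widely used approach is reciprocal rank fusion (RRF), which merges a full-text ranking and a semantic ranking without needing their raw scores to be comparable. This sketch is an assumption about how such a merge could look, not a description of Knowledge Hub's actual algorithm. The document IDs are made up, and `k=60` is the constant commonly used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked ID lists: each list contributes 1 / (k + rank) per item."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical result lists from the two search back ends.
full_text = ["doc-a", "doc-b", "doc-c"]
semantic = ["doc-b", "doc-d", "doc-a"]
fused = reciprocal_rank_fusion([full_text, semantic])
```

Documents that appear in both lists rise to the top, while documents found by only one method are still kept in the final ranking.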

Key Learnings

- Advanced database design with vector extensions

- AI/ML integration in production web applications

- Document processing and OCR optimization techniques

- Vector database operations and similarity search algorithms

- RAG (Retrieval-Augmented Generation) system implementation

- Containerization and deployment strategies with Docker

Technical Documentation

Knowledge Hub Technical Deep Dive

Comprehensive technical documentation covering architecture, implementation details, and system design decisions.